#GMSH PARALLEL SOFTWARE#

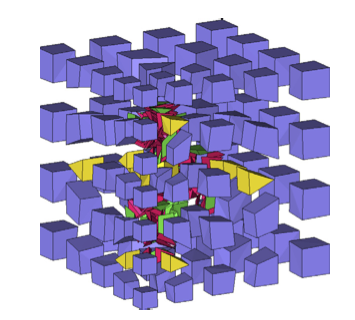

The p4est software for parallel AMR: Howto document and step-by-step examples. Carsten Burstedde, Lucas C. Wilcox, and Tobin Isaac, Aug. Abstract: We introduce the p4est software library for parallel adaptive mesh refinement (AMR).

The GMSH recorder type is a whole-model recorder; that is, it is meant to record all the model geometry and metadata along with selected response quantities.

GmshToFoam problem: not the same mesh in Gmsh vs. …

Can you help me use a Gmsh mesh in OpenFOAM? I converted the Gmsh mesh to OpenFOAM with gmshToFoam, and at this point I noticed a warning message:

`From function polyMesh::polyMesh(.`

`In file meshes/polyMesh/polyMeshFromShapeMesh.C at line 576`

`Found 372 undefined faces in mesh adding to default patch.`

I copied some files for solving the model:

`cp $FOAM_TUTORIALS/incompressible/icoFoam/elbow/system/fvS* system/`

`cp $FOAM_TUTORIALS/incompressible/icoFoam/elbow/constant/transportProperties constant/`

I studied the mesh in ParaView using the `paraFoam` command and noticed that the mesh is not the same and that the boundary definitions are not correct. I tried to run icoFoam, and it solved the model without problems, but the solution is not realistic. I think the problem comes from gmshToFoam: the OpenFOAM mesh is not the same as the Gmsh mesh. If you can share any (tutorial) model for creating an OpenFOAM model from a Gmsh mesh, that would also be highly appreciated.

In fact, I wanted to understand this problem because I have a model that includes mesh elements with a wide range of sizes, for microtechnology problems. In GMSH I have created a mesh with 20 000 nodes (4 DOF per node) with dimensions in […] (otherwise GMSH fails; there is a thin layer of 50 nm). The smallest element size is about 10 nm and the biggest about 50 µm. Keeping the size in […] in FreeFEM, with the physical constants in SI units, it works… But when I use a model with 35 000 nodes (4 DOF per node), the results are ZERO everywhere. I wonder whether this is a double-precision problem in the link between GMSH and FreeFEM or a problem in the numerical computations.

However, the XDMF format supports parallel IO, so we can read the mesh in parallel.
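On the double-precision question in the FreeFEM post above, a quick check can separate two effects: the resolution of a double itself, and the number of significant digits kept when coordinates pass through a text file. The sketch below is only an illustration. It uses the two sizes quoted in the post (10 nm and 50 µm), converts them to metres, and treats 50 µm as a representative coordinate magnitude, which is an assumption. The function names are hypothetical, and nothing here calls Gmsh or FreeFEM.

```python
import sys


def float_spacing(value: float) -> float:
    """Approximate gap between `value` and the next representable double."""
    return abs(value) * sys.float_info.epsilon


def survives_text_roundtrip(position: float, spacing: float, digits: int) -> bool:
    """True if two nodes `spacing` apart remain distinct after being written
    with `digits` significant digits and parsed back."""
    a = float(f"{position:.{digits}g}")
    b = float(f"{position + spacing:.{digits}g}")
    return a != b


# Sizes quoted in the post, expressed in metres (unit choice is an assumption).
largest = 50e-6   # biggest element size, 50 µm
smallest = 10e-9  # smallest element size, 10 nm

# Is the spacing between doubles near 50 µm finer than 10 nm?
print("double resolves 10 nm at 50 µm:", float_spacing(largest) < smallest)

# How many significant digits must a text file keep to separate the two nodes?
for digits in (3, 4, 16):
    print(digits, "digits:", survives_text_roundtrip(largest, smallest, digits))
```

If the first check passes but the round trip fails at the precision actually used in the mesh file, the file format rather than double precision would be the place to look. If both pass, the zero results more likely come from the numerical side, such as scaling or conditioning, though the post does not give enough detail to say.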

#GMSH PARALLEL HOW TO#

Let us now consider the same problem, but using GMSH to generate the mesh and subdomains. We will then in turn show how to use this data to generate discontinuous functions in DOLFINx.

Gmsh.jl contains the API for Gmsh, a three-dimensional finite element mesh generator. With the help of Gmsh.jl, it is possible to add parametric model construction. The algorithm developed for one field can be easily applied and extended to models in the other fields.

High performance parallel adaptive simulations operating on leadership … mesh generators that partially meet the requirements: gmsh (GPL) 125, NETGEN.

You can manually direct Gmsh to partition your mesh for you: `gmsh -3 -nopopup -format msh -o sphere2.msh -part 8 sphere.msh`, or you can let FiPy handle it by passing in our … We need to more clearly document how FiPy handles partitioning (or, more accurately, how FiPy gets Gmsh to handle partitioning).

It is clear that the DistMesh (MATLAB m-code) algorithm is the most costly, while Gmsh (C++ code) falls in the middle, and Triangle (C code) and circgrid are both very fast.

I have some doubts about the correct use of GMSH and FreeFEM for 3D meshes.

(1) I would like to understand what is the difference between:

(2) According to an article from 2018, FreeFEM can't read `*.msh`, only `*.mesh`? But with correct use of Physical Volume or Physical Surface, it seems to me that `*.msh` works.

(3) Another piece of information, seen on a forum, claims that we should take care of the format as follows: select the older mesh version, for compatibility with FF++. I also read that the mesh read from Gmsh is not correct for FreeFEM and that we should transform it with the command tetg etc… For the last two commands I get an error.

So, I would be happy to understand and follow the best way to use GMSH and FreeFEM for 3D meshes.

Thank you for your prompt and thorough response. Thus, I keep the `*.msh` format in version 2.2, as I usually do.
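Since the FreeFEM post ties a working `*.msh` import to the 2.2 format and to Physical Volume and Physical Surface definitions, it can help to inspect a mesh file before handing it to a solver. The following is a minimal sketch, assuming an ASCII MSH 2.2 file in which the first tag of each element is its physical group and 0 means no group. The sample mesh is made up for illustration, and `count_physical_groups` is a hypothetical helper, not part of Gmsh or FreeFEM.

```python
from collections import Counter

# Hypothetical MSH 2.2 ASCII sample: one triangle in Physical Surface 2,
# one triangle with no physical group (tag 0), one tetrahedron in Physical Volume 1.
SAMPLE_MSH = """$MeshFormat
2.2 0 8
$EndMeshFormat
$Nodes
4
1 0 0 0
2 1 0 0
3 0 1 0
4 0 0 1
$EndNodes
$Elements
3
1 2 2 2 1 1 2 3
2 2 2 0 2 1 2 4
3 4 2 1 1 1 2 3 4
$EndElements
"""

# MSH 2.2 element type codes mapped to topological dimension
# (15 point, 1 line, 2 triangle, 3 quadrangle, 4 tetrahedron, 5 hexahedron).
ELEMENT_DIM = {15: 0, 1: 1, 2: 2, 3: 2, 4: 3, 5: 3}


def count_physical_groups(msh_text):
    """Return (format_version, Counter keyed by (dimension, physical_tag))."""
    lines = [ln.strip() for ln in msh_text.splitlines()]
    version = lines[lines.index("$MeshFormat") + 1].split()[0]
    start = lines.index("$Elements")
    n_elements = int(lines[start + 1])
    counts = Counter()
    for line in lines[start + 2 : start + 2 + n_elements]:
        # Each line: element number, element type, number of tags, tags, node list.
        fields = [int(f) for f in line.split()]
        elm_type, n_tags = fields[1], fields[2]
        physical = fields[3] if n_tags > 0 else 0
        counts[(ELEMENT_DIM.get(elm_type, -1), physical)] += 1
    return version, counts


version, counts = count_physical_groups(SAMPLE_MSH)
print("format version:", version)
for (dim, tag), n in sorted(counts.items()):
    print(f"dimension {dim}, physical tag {tag}: {n} element(s)")
```

Surface elements reported under physical tag 0, or a mesh with no 2D elements at all, would suggest that some boundaries were never assigned a Physical Surface. That is one plausible thing to check when an importer complains about undefined boundary faces, though the posts here do not confirm it as the cause.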
